http://android.git.kernel.org/?p=platform/frameworks/base.git;a=commitdiff;h=d5770917a50a828cb4337c2a392b3e4a375624b9#patch12

Before we delve into the change, note that there are basically two types of DRM schemes:

1. All of the data is stored under a uniform encryption layer (currently defined as DecryptApiType::CONTAINER_BASED in the DRM framework);

2. Encrypted data is embedded within a plain-text container format, so it can be decrypted packet by packet, which also makes it suitable for progressive download and streaming.

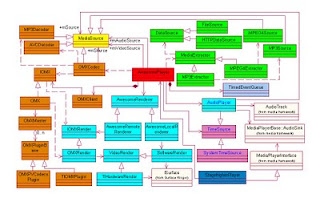
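To make the distinction concrete, here is a minimal sketch that uses a made-up container layout (a run of `[1-byte length][payload]` records) and a toy XOR "cipher". Both are hypothetical stand-ins, not any real DRM scheme or Stagefright type. In the container-based case even the framing is encrypted, so nothing can be parsed until the stream is decrypted. In the sample-based case the framing stays in the clear, so each sample can be located first and decrypted on its own.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

using Bytes = std::vector<uint8_t>;

// Toy stand-in for a real cipher (hypothetical): XOR every byte with a key.
// XOR is its own inverse, so the same call encrypts and decrypts.
Bytes xorBytes(Bytes data, uint8_t key) {
    for (auto& b : data) b ^= key;
    return data;
}

// Hypothetical container: a run of [1-byte length][payload] records.
// The framing bytes must be plaintext for this parser to find the samples.
std::vector<Bytes> parseSamples(const Bytes& file) {
    std::vector<Bytes> samples;
    size_t pos = 0;
    while (pos < file.size()) {
        size_t len = file[pos++];
        if (pos + len > file.size()) break;  // truncated or unreadable record: stop
        samples.emplace_back(file.begin() + pos, file.begin() + pos + len);
        pos += len;
    }
    return samples;
}

// Scheme 1 (container-based): everything, framing included, is encrypted,
// so the whole stream is decrypted before the parser sees any structure.
std::vector<Bytes> readContainerBased(const Bytes& encryptedFile, uint8_t key) {
    return parseSamples(xorBytes(encryptedFile, key));
}

// Scheme 2 (sample-based): framing is plaintext and only payloads are
// encrypted, so the parser runs first and each sample is decrypted on its own.
std::vector<Bytes> readSampleBased(const Bytes& file, uint8_t key) {
    std::vector<Bytes> samples = parseSamples(file);
    for (auto& s : samples) s = xorBytes(s, key);
    return samples;
}
```

Because scheme 2 never needs bytes beyond the current sample, it can start decrypting as soon as the first record has arrived, which is why it fits progressive download and streaming.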

The commit consists mainly of the following parts:

1) Extension in the DataSource interface:

```diff
+    // for DRM
+    virtual DecryptHandle* DrmInitialization(DrmManagerClient *client) {
+        return NULL;
+    }
+    virtual void getDrmInfo(DecryptHandle **handle, DrmManagerClient **client) {};
```

FileSource implements these APIs, communicating with the DRM service to open a decryption session. For CONTAINER_BASED protection (e.g. OMA DRM v1), FileSource intercepts the readAt() function and returns the decrypted data transparently to its client, the file parser, which therefore remains unaware of the underlying protection mechanism.
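The readAt() interception described above is essentially a decorator. The following is a minimal sketch with simplified, hypothetical types (`SimpleDataSource`, `MemorySource`, `DecryptingSource`) and a toy position-dependent XOR keystream standing in for the DRM service's decrypt call; it is not the real `android::DataSource` or `DrmManagerClient` API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical, simplified stand-in for a DataSource: random-access reads.
class SimpleDataSource {
public:
    virtual ~SimpleDataSource() = default;
    // Reads up to `size` bytes at `offset`; returns bytes read, 0 at EOF, -1 on error.
    virtual std::ptrdiff_t readAt(int64_t offset, void* data, size_t size) = 0;
};

// Plain in-memory source standing in for the underlying (encrypted) file.
class MemorySource : public SimpleDataSource {
public:
    explicit MemorySource(std::vector<uint8_t> bytes) : mBytes(std::move(bytes)) {}
    std::ptrdiff_t readAt(int64_t offset, void* data, size_t size) override {
        if (offset < 0) return -1;
        if (static_cast<uint64_t>(offset) >= mBytes.size()) return 0;  // EOF
        size_t n = std::min(size, mBytes.size() - static_cast<size_t>(offset));
        std::memcpy(data, mBytes.data() + offset, n);
        return static_cast<std::ptrdiff_t>(n);
    }
private:
    std::vector<uint8_t> mBytes;
};

// Toy keystream (hypothetical, not a real cipher): byte i of the file is
// XORed with key ^ (i & 0xFF), so decrypting a range needs its absolute offset.
inline uint8_t toyKeystream(uint8_t key, int64_t pos) {
    return static_cast<uint8_t>(key ^ (pos & 0xFF));
}

// Decorator modelling the FileSource behaviour: it reads ciphertext from the
// wrapped source and returns plaintext, so a parser calling readAt() never
// sees the encryption layer.
class DecryptingSource : public SimpleDataSource {
public:
    DecryptingSource(std::unique_ptr<SimpleDataSource> inner, uint8_t key)
        : mInner(std::move(inner)), mKey(key) {}
    std::ptrdiff_t readAt(int64_t offset, void* data, size_t size) override {
        std::ptrdiff_t n = mInner->readAt(offset, data, size);
        auto* out = static_cast<uint8_t*>(data);
        for (std::ptrdiff_t i = 0; i < n; ++i)  // no-op when n <= 0
            out[i] ^= toyKeystream(mKey, offset + i);
        return n;
    }
private:
    std::unique_ptr<SimpleDataSource> mInner;
    uint8_t mKey;
};
```

A parser given a `DecryptingSource` can seek and read at any offset exactly as it would with a clear file, which is the property that lets the existing extractors stay unchanged for CONTAINER_BASED content.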

2) Extension in the MediaExtractor interface:

```diff
+    // for DRM
+    virtual void setDrmFlag(bool flag) {};
+    virtual char* getDrmTrackInfo(size_t trackID, int *len) {
+        return NULL;
+    }
```

The above APIs are used to retrieve context data, such as the Protection Scheme Information Box ("sinf") in MP4/3GP files, which is needed to properly initialize the corresponding DRM plugin.

3) DRMExtractor and the DRM format sniffer:

SniffDRM is registered to identify DRM-protected files and their original container format. As mentioned, for CONTAINER_BASED encryption, FileSource handles data decryption transparently for the parsers. For the other scheme, where each sample or NAL unit is the basic unit of encryption, a DRMExtractor is created to wrap the original MediaExtractor and decrypt each sample/NAL unit after reading it from the original extractor. In this way, DRM-related logic is kept separate from the actual file parsers.
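The wrapping approach can be sketched as a decorator around the extractor: it asks the original extractor for each track's protection info (the role `getDrmTrackInfo()` plays), uses it to set up decryption, and decrypts every sample the original extractor returns. All types here (`SimpleExtractor`, `FakeExtractor`, `DrmWrappingExtractor`, `createExtractor`) and the toy XOR cipher are hypothetical simplifications, and taking the key from the first byte of the track info is only a placeholder for what a real DRM plugin would do.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <optional>
#include <utility>
#include <vector>

using Bytes = std::vector<uint8_t>;

// Hypothetical, simplified extractor interface: one sample per call.
class SimpleExtractor {
public:
    virtual ~SimpleExtractor() = default;
    virtual size_t countTracks() const = 0;
    // Next sample of the track, or std::nullopt at end of stream.
    virtual std::optional<Bytes> readSample(size_t track) = 0;
    // Analogue of getDrmTrackInfo(): protection info for the track
    // (e.g. the contents of a "sinf" box); empty if the track is clear.
    virtual Bytes drmTrackInfo(size_t /*track*/) const { return {}; }
};

// Stand-in for a real single-track parser; it knows nothing about decryption.
class FakeExtractor : public SimpleExtractor {
public:
    FakeExtractor(std::vector<Bytes> samples, Bytes drmInfo)
        : mSamples(std::move(samples)), mDrmInfo(std::move(drmInfo)) {}
    size_t countTracks() const override { return 1; }
    std::optional<Bytes> readSample(size_t) override {
        if (mNext >= mSamples.size()) return std::nullopt;
        return mSamples[mNext++];
    }
    Bytes drmTrackInfo(size_t) const override { return mDrmInfo; }
private:
    std::vector<Bytes> mSamples;
    Bytes mDrmInfo;
    size_t mNext = 0;
};

// Decorator modelled on the DRMExtractor idea: the wrapped parser does all
// container work; this class only decrypts the samples it hands back.
class DrmWrappingExtractor : public SimpleExtractor {
public:
    explicit DrmWrappingExtractor(std::unique_ptr<SimpleExtractor> original)
        : mOriginal(std::move(original)) {
        // Fetch each track's protection info once, up front.
        for (size_t t = 0; t < mOriginal->countTracks(); ++t)
            mTrackInfo.push_back(mOriginal->drmTrackInfo(t));
    }
    size_t countTracks() const override { return mOriginal->countTracks(); }
    std::optional<Bytes> readSample(size_t track) override {
        std::optional<Bytes> sample = mOriginal->readSample(track);
        const Bytes& info = mTrackInfo.at(track);
        if (sample && !info.empty()) {
            // Placeholder key derivation; a real plugin would be initialised
            // from this info and obtain the key through its license.
            const uint8_t key = info[0];
            for (auto& b : *sample) b ^= key;
        }
        return sample;
    }
private:
    std::unique_ptr<SimpleExtractor> mOriginal;
    std::vector<Bytes> mTrackInfo;
};

// Hypothetical sniffer/factory: wrap only when some track reports DRM info.
std::unique_ptr<SimpleExtractor> createExtractor(std::unique_ptr<SimpleExtractor> original) {
    for (size_t t = 0; t < original->countTracks(); ++t) {
        if (!original->drmTrackInfo(t).empty())
            return std::make_unique<DrmWrappingExtractor>(std::move(original));
    }
    return original;
}
```

Clear files pass through `createExtractor` untouched, so the decryption cost and code path only apply to protected content.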

However, because DRM is usually an extension of the underlying container format, it may not be as easily decoupled from the file parser for other protection schemes. For example, Microsoft's PIFF extension to the ISO base media file format requires an IV for each sample, plus sub-sample encryption details where applicable. This design also duplicates logic in the DRM service, which has to recognize the original container format for non-CONTAINER_BASED encryption.
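To see why a generic sample-level wrapper falls short here, consider what decrypting one PIFF-style sample needs beyond its bytes: a per-sample IV and, with sub-sample encryption, a list of clear/encrypted byte ranges. Both come from container metadata that only the parser reads. The sketch below models this with hypothetical types (`SubSample`, `SampleCryptoInfo`, `decryptSample`) and a toy keystream that is not AES-CTR and does not follow PIFF's actual box layout; it only illustrates that the decrypt step cannot run without per-sample metadata supplied by the parser.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

using Bytes = std::vector<uint8_t>;

// Hypothetical sub-sample range: some clear bytes followed by encrypted bytes.
struct SubSample {
    size_t clearBytes;      // leading bytes left in the clear (e.g. NAL headers)
    size_t encryptedBytes;  // bytes that follow and are encrypted
};

// Hypothetical per-sample metadata a parser would have to pass along.
struct SampleCryptoInfo {
    uint64_t iv;                        // per-sample IV (toy value)
    std::vector<SubSample> subSamples;  // empty => whole sample encrypted
};

// Toy keystream byte (NOT AES-CTR): depends on the key, the IV, and the
// index of the byte within the encrypted portion of the sample.
inline uint8_t toyKeystream(uint8_t key, uint64_t iv, size_t n) {
    return static_cast<uint8_t>(key ^ (iv & 0xFF) ^ (n & 0xFF));
}

// Decrypts one sample in place (XOR, so the same call also encrypts).
// The keystream index advances only over encrypted bytes; clear ranges
// are skipped without consuming keystream.
void decryptSample(Bytes& sample, uint8_t key, const SampleCryptoInfo& info) {
    std::vector<SubSample> ranges = info.subSamples;
    if (ranges.empty()) ranges.push_back({0, sample.size()});

    size_t pos = 0;        // position within the sample
    size_t streamIdx = 0;  // position within the encrypted keystream
    for (const SubSample& r : ranges) {
        if (pos + r.clearBytes + r.encryptedBytes > sample.size())
            throw std::out_of_range("sub-sample ranges exceed sample size");
        pos += r.clearBytes;
        for (size_t i = 0; i < r.encryptedBytes; ++i)
            sample[pos++] ^= toyKeystream(key, info.iv, streamIdx++);
    }
}
```

Without the IV and ranges, a wrapper sitting behind an unmodified parser has nothing to feed into `decryptSample`, which is the coupling the paragraph above describes.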

4) Misc:

- changes in AwesomePlayer for rights management;
- changes in MPEG4Extractor to retrieve "sinf";
- etc.